After yet another supply chain attack, Shai-hulud (npm this time, though the ecosystem doesn’t really matter that much), with 500 packages affected and millions of downloads, I finally wrapped up the protection system for my dev environment. I really don’t want to be the next one exploited.

Package takeovers will keep happening, and it’s only a matter of time before I’m affected by accident (as is everyone else; skill doesn’t matter much here).

What’s the threat model? A new package version gets published and I update to it. Package managers run custom compilation/installation code, so either installing the package or running its code can execute malware that steals my (or CI’s, or the repository’s, or …) credentials.

Since my service needs some of those credentials, I can’t just get rid of them. I can make things a little better, though, by ensuring that only the required credentials are provided. For example, a running service does not need the npm token, so it should be stripped from (or replaced in) the env file. That’s the easy part, but it still requires some action and not forgetting: not all services default to passing empty environments.
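A minimal sketch of that stripping step in Python, assuming a simple `KEY=value` env file (optionally prefixed with `export`). The key names in `TOOLING_ONLY` are hypothetical examples of variables that only the package tooling needs; adjust them to your own setup.

```python
import sys

# Hypothetical example keys: tokens that only package tooling needs.
TOOLING_ONLY = frozenset({"NPM_TOKEN", "NODE_AUTH_TOKEN", "YARN_NPM_AUTH_TOKEN"})


def strip_env_file(text: str, drop: frozenset = TOOLING_ONLY) -> str:
    """Return env-file contents without the lines that define keys in `drop`.

    Comments, blank lines and all other assignments pass through unchanged.
    """
    kept = []
    for line in text.splitlines():
        key = line.split("=", 1)[0].strip()
        if key.startswith("export "):
            key = key[len("export "):].strip()
        if key in drop:
            continue  # tooling-only secret: never reaches the service
        kept.append(line)
    return ("\n".join(kept) + "\n") if kept else ""


if __name__ == "__main__":
    # Usage (hypothetical file names): python strip_env.py < .env.dev > .env.service
    sys.stdout.write(strip_env_file(sys.stdin.read()))
```

Dropping keys, rather than listing the ones to keep, mirrors the “stripped or replaced” step described above; an allowlist of keys the service needs would be the stricter alternative.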

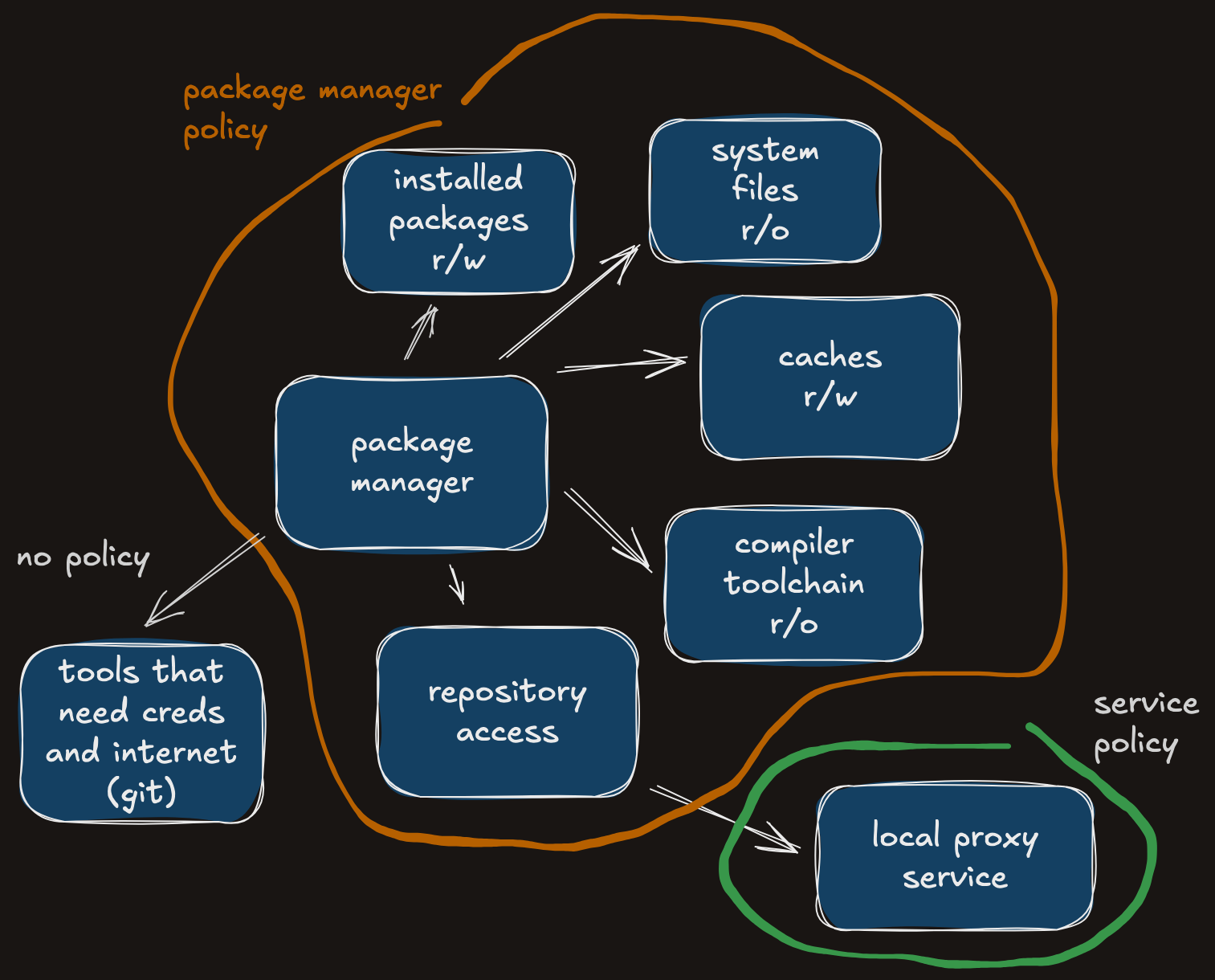

Now, lots of credentials live in other places: the keychain, token vaults, project files, config files, etc. But neither npm nor the service under development has any business looking there. Any discovered credentials could be exfiltrated, but package managers shouldn’t be talking to the internet at all (apart from the package repository). So the big goal is to prevent both reading secrets and sending them out. On some systems that’s a reasonable task (Linux/SELinux), while on others it’s obscure and obnoxious (macOS/sandbox-exec). Let’s look at the macOS version here.

Sandbox-exec is a tool that applies a custom policy of restrictions to a given application. It supports both default-allow and default-deny policies and lets you make granular choices for specific objects like paths, connections and executables. In theory it’s deprecated, but nothing can replace it right now (app capabilities aren’t even comparable). It’s also undocumented and hard to debug. Fortunately, there are enough examples out there (for instance in /System/Library/Sandbox/Profiles/) that we can construct what we need.
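For reference, sandbox-exec takes the profile file with `-f` and named parameters with `-D key=value`, which the profile reads back with `(param "KEY")`. A small Python helper for building that command line might look like this; the profile name `yarn-install.sb` and the use of the current directory’s name as `PROJECT` are assumptions for illustration, not fixed conventions.

```python
import os
import subprocess
import sys
from pathlib import Path

SANDBOX_EXEC = "/usr/bin/sandbox-exec"


def sandbox_command(profile: str, params: dict, argv: list) -> list:
    """Build a sandbox-exec command line that runs `argv` under `profile`."""
    cmd = [SANDBOX_EXEC, "-f", profile]
    for key, value in params.items():
        cmd += ["-D", f"{key}={value}"]  # read in the profile as (param "KEY")
    return cmd + list(argv)


if __name__ == "__main__":
    # Hypothetical usage: sandboxed `yarn install` for the project in the cwd.
    cmd = sandbox_command(
        "yarn-install.sb",
        {"HOME": os.environ["HOME"], "PROJECT": Path.cwd().name},
        ["yarn", "install"],
    )
    sys.exit(subprocess.call(cmd))
```

Passing HOME and PROJECT as parameters keeps a single profile file reusable across projects instead of hardcoding paths into it.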

Starting with package installation using yarn (just an example; it could be pip, cargo, whatever), the policy should prevent reading anything not relevant to the task. Configuration files and credentials should be protected from any access. Connections should only be allowed to the package repository. Unnecessary environment variables should be cleared (they can be dropped in a wrapper before yarn starts). The necessary files can be collected by first running yarn with (allow (with report) file*), which allows every file access but reports each one. This lists a bunch of files and devices that would generally be available anyway (/dev/null, system libraries, etc.), cache areas that need to be writable, project files that need to be readable, and various utilities started by the main process (compiler, linker, make, coreutils, …). All of those can be explicitly allowed in the policy, with some parameterisation for the home directory and project location. For example:

```
(allow file-read-data
  (path "/private/etc/passwd")
  (path (string-append (param "HOME") "/.npmrc"))
  (path (string-append (param "HOME") "/.yarnrc"))
  (path (string-append (param "HOME") "/.yarnrc.yml"))
  (path (string-append (param "HOME") "/src"))
  (path (string-append (param "HOME") "/src/" (param "PROJECT")))
  (path (string-append (param "HOME") "/src/" (param "PROJECT") "/.tool-versions"))
  (path (string-append (param "HOME") "/src/" (param "PROJECT") "/Gemfile"))
  ...)
```
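Turning the reported accesses into rules like the ones above is mechanical enough to script. The sketch below is a hypothetical helper that reads sandbox report lines (for example captured with `log stream --predicate 'sender == "Sandbox"'`) and groups the allowed file paths by operation, replacing the home directory with `(param "HOME")`. The line format it parses is an assumption, since it isn’t documented, so check it against your own logs. Review the output by hand too: anything the permissive run touched ends up in the list.

```python
import re
import sys
from collections import defaultdict

# Assumed shape of a report line (not officially documented), e.g.
#   Sandbox: yarn(4242) allow file-read-data /Users/me/.npmrc
# Denials may carry a count such as "deny(1)"; the pattern accepts both.
REPORT = re.compile(
    r"Sandbox: (?P<proc>.+?)\((?P<pid>\d+)\) "
    r"(?P<action>allow|deny)(?:\(\d+\))? (?P<op>\S+) (?P<path>/.*)$"
)


def to_filter(path: str, home: str) -> str:
    """Render one path as an SBPL filter, parameterising the home directory."""
    if path == home or path.startswith(home + "/"):
        return f'(path (string-append (param "HOME") "{path[len(home):]}"))'
    return f'(path "{path}")'


def rules_from_log(lines, home: str) -> str:
    """Group allowed file operations into one (allow <op> ...) form per op."""
    seen = defaultdict(set)
    for line in lines:
        m = REPORT.search(line.rstrip("\n"))
        if m and m["action"] == "allow" and m["op"].startswith("file-"):
            seen[m["op"]].add(m["path"])
    forms = []
    for op in sorted(seen):
        filters = "\n".join("  " + to_filter(p, home) for p in sorted(seen[op]))
        forms.append(f"(allow {op}\n{filters})")
    return "\n\n".join(forms)


if __name__ == "__main__":
    # Usage (hypothetical file name): python rules_from_log.py "$HOME" < report.log
    print(rules_from_log(sys.stdin, sys.argv[1]))
```

Only `allow` lines are collected, so paths that a stricter profile denied never get promoted into the allowlist automatically.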

Typical targets

An alternative approach which takes less time would be to exclude the paths instead. That is, allow by default, but deny access to some elements. For example:

```
; everything is allowed by default...
(allow default)
; ...except these locations
(deny file*
  (subpath (string-append (param "HOME") "/.ssh"))
  (subpath (string-append (param "HOME") "/.aws"))
  (subpath (string-append (param "HOME") "/.git"))
  (subpath (string-append (param "HOME") "/.zsh_history"))
  ...)
```

This keeps the policy simpler, but leaves a lot of room for workarounds. It’s very likely that some secret is saved somewhere that isn’t on the list, or that one will be in the future without the policy getting updated.
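One way to see how much a deny list misses is to look for credential-looking files it doesn’t cover. This is a rough, hypothetical heuristic: the name fragments in `HINTS` are guesses, it only looks two levels deep, and a clean result proves nothing, which is exactly the weakness described above.

```python
from pathlib import Path

# The deny list from the example above, relative to HOME.
DENIED = (".ssh", ".aws", ".git", ".zsh_history")
# Hypothetical name fragments that often point at credentials.
HINTS = ("token", "secret", "credential", "netrc", "auth", "npmrc", "pypirc", "key")


def is_denied(path: Path, home: Path) -> bool:
    """Mirror (subpath HOME/<entry>): the entry itself or anything below it."""
    return path.relative_to(home).parts[0] in DENIED


def uncovered_suspects(home: Path, max_depth: int = 2) -> list:
    """List credential-looking paths under `home` that the deny list misses."""
    found = []

    def walk(directory: Path, depth: int) -> None:
        try:
            entries = sorted(directory.iterdir())
        except OSError:
            return  # missing or unreadable directories are skipped
        for entry in entries:
            if is_denied(entry, home):
                continue
            if any(hint in entry.name.lower() for hint in HINTS):
                found.append(entry)
            if depth < max_depth and entry.is_dir() and not entry.is_symlink():
                walk(entry, depth + 1)

    walk(home, 1)
    return found


if __name__ == "__main__":
    for path in uncovered_suspects(Path.home()):
        print(path)
```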

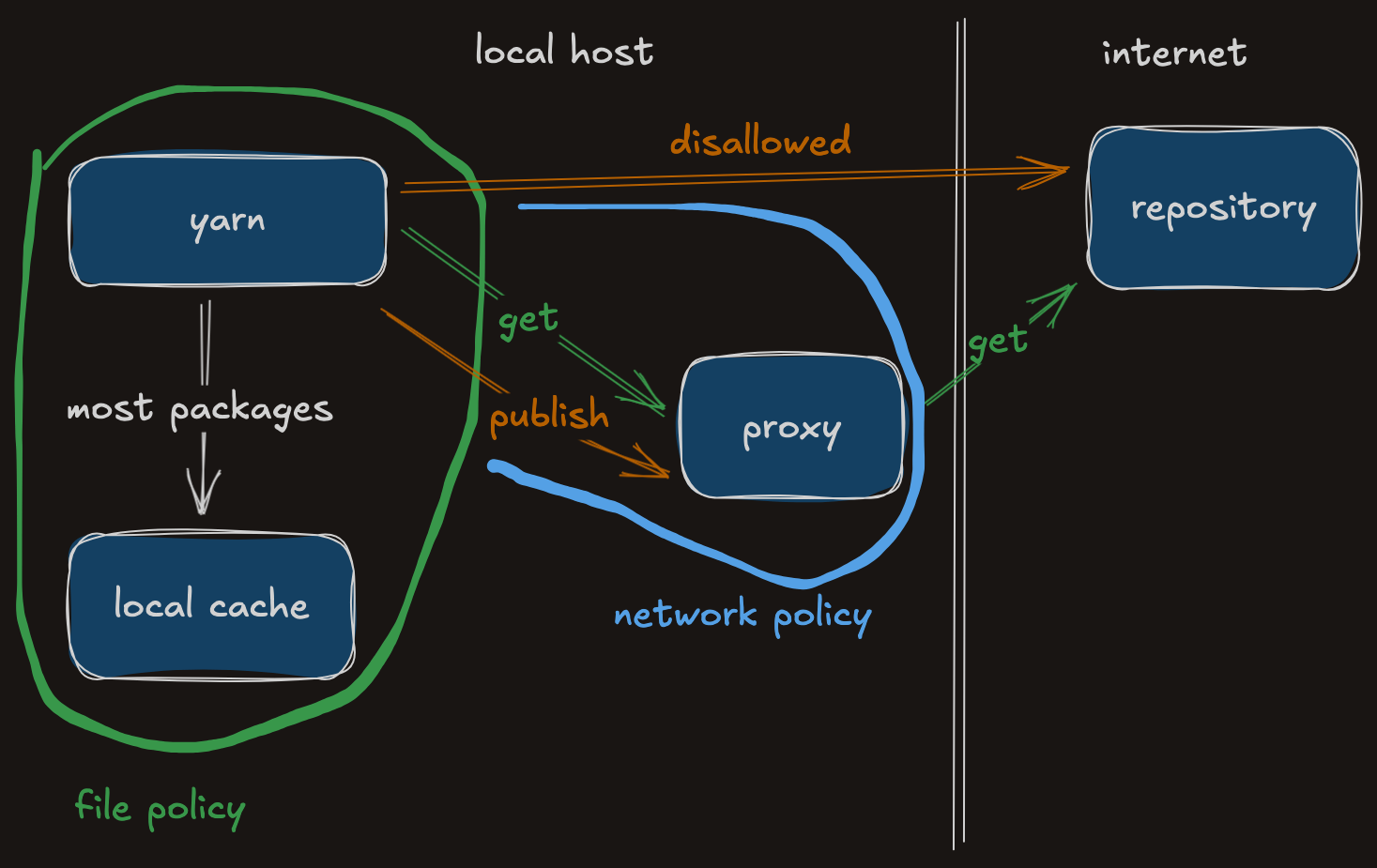

Networking

Unfortunately, the networking part is harder. The macOS sandbox doesn’t allow specifying arbitrary IP addresses: you get the choice of “localhost” or “everything”. Even if it did, repositories hosted on cloud services share addresses with many other endpoints, so IP-based rules wouldn’t help us anyway.

So the only available solution is to run my own local proxy. It doesn’t even need caching, since npm and yarn maintain their own caches. As long as the proxy listens on localhost on a specific port, the policy can restrict outbound connections to only that endpoint. This prevents almost all data exfiltration and any attempts to push updates to packages (in case the credentials are available somewhere).

```
; only the local proxy
(allow network-outbound (remote ip "localhost:9123"))
; the system DNS resolver socket
(allow network-outbound (literal "/private/var/run/mDNSResponder"))
```
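A minimal sketch of such a proxy in Python, assuming the package manager is pointed at `http://127.0.0.1:9123` through its HTTPS proxy setting (for example npm’s `https-proxy`) and that only HTTPS `CONNECT` tunnels to the registry hosts in `ALLOWED` are needed; that host list is an assumption. Because traffic inside the tunnel stays encrypted, this sketch only restricts which hosts can be reached. It can’t tell a download from a publish to the same registry; that would need a proxy that sees the HTTP requests themselves.

```python
import select
import socket
import socketserver

LISTEN = ("127.0.0.1", 9123)  # matches (remote ip "localhost:9123")
# Assumed registry hosts; add your company's registry if needed.
ALLOWED = {"registry.npmjs.org", "registry.yarnpkg.com"}


def allowed_target(request_line: str):
    """Return (host, 443) for an allowed CONNECT request line, else None."""
    parts = request_line.split()
    if len(parts) != 3 or parts[0].upper() != "CONNECT":
        return None  # plain HTTP and other methods are refused
    host, sep, port = parts[1].rpartition(":")
    if not sep or port != "443" or host.lower() not in ALLOWED:
        return None
    return host, 443


def relay(a: socket.socket, b: socket.socket, idle: float = 60.0) -> None:
    """Copy bytes in both directions until either side closes or goes idle."""
    while True:
        readable, _, _ = select.select([a, b], [], [], idle)
        if not readable:
            return
        for src in readable:
            data = src.recv(65536)
            if not data:
                return
            (b if src is a else a).sendall(data)


class TunnelHandler(socketserver.StreamRequestHandler):
    def handle(self) -> None:
        request_line = self.rfile.readline(8192).decode("latin-1")
        while self.rfile.readline(8192) not in (b"\r\n", b"\n", b""):
            pass  # skip the request headers
        target = allowed_target(request_line)
        if target is None:
            self.wfile.write(b"HTTP/1.1 403 Forbidden\r\n\r\n")
            return
        try:
            upstream = socket.create_connection(target, timeout=10)
        except OSError:
            self.wfile.write(b"HTTP/1.1 502 Bad Gateway\r\n\r\n")
            return
        with upstream:
            self.wfile.write(b"HTTP/1.1 200 Connection Established\r\n\r\n")
            # The client waits for the 200 before starting TLS, so nothing
            # should be left in rfile's buffer at this point.
            try:
                relay(self.connection, upstream)
            except OSError:
                pass  # connection reset or upstream timeout ends the tunnel


class TunnelServer(socketserver.ThreadingTCPServer):
    daemon_threads = True
    allow_reuse_address = True


if __name__ == "__main__":
    with TunnelServer(LISTEN, TunnelHandler) as server:
        server.serve_forever()
```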

Environment variables

Cleaning up the variables should be trivial in theory (just unset them), but… Some variables should not be passed down to the build processes, yet by default they are, and there’s no way to do that cleanup in the macOS sandbox policy. So the only way is to use wrappers for specific commands, but… that’s not something people will typically have set up. If you’re using nixpkgs, it’s possible to replace most build commands with wrappers that unset the unnecessary secrets, for example all tokens when calling make. But that still leaves the system-installed tools, which may be invoked via hardcoded paths.
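Outside nixpkgs (where `makeWrapper` can `--unset` specific named variables), a pattern-based wrapper placed earlier on PATH is one option. This is a hypothetical sketch: the `REAL_TOOL` path and the `SECRET` name pattern are assumptions, and, as noted above, it does nothing for tools invoked through hardcoded paths.

```python
#!/usr/bin/env python3
"""Hypothetical wrapper for `make`: drop secret-looking variables, then exec."""
import os
import re
import sys

REAL_TOOL = "/usr/bin/make"  # assumption: the real binary this wrapper shadows
# Assumed naming conventions for secrets; extend for your environment.
SECRET = re.compile(r"TOKEN|SECRET|PASSWORD|API_KEY|ACCESS_KEY", re.IGNORECASE)


def scrubbed(env) -> dict:
    """Return a copy of `env` without variables whose names look like secrets."""
    return {name: value for name, value in env.items() if not SECRET.search(name)}


if __name__ == "__main__":
    # Replace this process so the real tool never sees the removed variables.
    os.execve(REAL_TOOL, [REAL_TOOL, *sys.argv[1:]], scrubbed(os.environ))
```

Matching on name patterns catches new tokens without updating a list, at the cost of occasionally removing a harmless variable that happens to match.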

So in practice this is far from trivial to actually apply to the whole system.

The app

Once the dependencies are safely installed, the app itself needs to run. That means it needs to do whatever custom things it does, which removes a lot of commonality and opportunities for templating. A lot of it will still be common to webapps (reading code, writing some caches and logs, database access), but there will very often be some customisations.

Again, local paths and proxies to external services will solve most problems, but setting them up is entirely up to the app’s developers.

What are the problems

Over time, the set of necessary files will change. New functionality in package managers and apps brings new behaviours, which can cause weird faults until you remember to check the logs and update the policies. Updated packages will cause weirdness too. A macOS update might even break the policy itself; who knows.

Many dev environments differ in weird ways, which makes the policies really hard to share. For example, some libraries may be installed locally, some via Homebrew, some via nix, and some bundled with npm packages. All of those require different paths to be added and other things to be managed. This is from my policy, but it will likely be different for you:

```
(allow file*
  (subpath (string-append (param "HOME") "/Library/Caches/Yarn"))
  (subpath (string-append (param "HOME") "/Library/Caches/node-gyp"))
  (regex (string-append "^" (param "HOME") "/.local/share/mise/installs/node/[^/]+/lib/node_modules/"))
  (subpath (string-append (param "HOME") "/src/" (param "PROJECT") "/node_modules")))
```
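The regex entry is what keeps this rule working across node version upgrades under mise. Patterns built with string-append are easy to get subtly wrong, so it can help to try the same string in an ordinary regex engine first. This assumes Python’s `re` agrees with the sandbox’s regex matching for a pattern this simple, which is likely but not documented. It also shows that the unescaped `.` in `/.local` matches any character, so the rule is slightly looser than it looks.

```python
import re


def policy_regex(home: str) -> str:
    """Build the same pattern the policy does with string-append (HOME unescaped)."""
    return "^" + home + "/.local/share/mise/installs/node/[^/]+/lib/node_modules/"


if __name__ == "__main__":
    pattern = re.compile(policy_regex("/Users/me"))
    for path in (
        "/Users/me/.local/share/mise/installs/node/22.11.0/lib/node_modules/npm/bin/npm-cli.js",
        "/Users/me/.local/share/mise/installs/node/22.11.0/bin/node",
        "/Users/me/xlocal/share/mise/installs/node/22.11.0/lib/node_modules/x",
    ):
        print(bool(pattern.search(path)), path)
```

Run as a script, it reports True for the first and third paths and False for the second; the third match is the unintended one.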

Unfortunately, sandbox-exec also can’t force an executed process to run under a specific policy. It can of course be configured or changed from the parent app, but there’s no “yarn with this policy can start git with that policy”: a child either keeps the current policy or runs with none at all. So there’s no good way to both prevent yarn from accessing .ssh/id_rsa and sandbox git differently. We end up with the least bad option:

```
(allow process-exec (with no-sandbox)
  (regex "^/nix/store/[^/]+/bin/git"))
```

There are also weird interactions with other apps. If your yarn is run through mise and Ruby is required for the project, you suddenly need the yarn wrapper to be able to read the local Gemfile.

Finally, every policy will leave some holes open. For example, if git is allowed to run without the sandbox and the attacker knows about it, they can set git’s pager to a script saved in a cache directory and trigger a long git log. Or exfiltrate data using custom DNS queries. Or in a thousand other ways.

Who would use this solution?

OK, so after a lot of custom work, my system is protected from trivial, untargeted supply chain attacks (which are most of them). But who else would do this?

People who really care and take some weird pleasure in coming up with systems like this will probably be interested. The other 99.999%, probably not. Realistically, it requires a lot of maintenance work, some deeper system knowledge, and scripts for the cleanup that policies can’t do. And for shared credentials (like the company’s package registry), even if I don’t get hacked, one day someone else will.

Here’s my yarn install policy as nightmare fuel and an exercise to find exciting exploit workarounds for it.

At least SELinux makes this much easier (though it’s still famously convoluted) by using labels that make grouping things easier. Incorporating something like seccomp/pledge into the tools themselves could also be interesting, but it’s hard for the developers to maintain.

So, sadly, I don’t expect this specific approach to become popular. But maybe this example will nudge one more person to think about improving things a little more. Many proposed solutions require changing the whole ecosystem (limiting dependencies, not compiling things, no custom build code), so they have minimal chances of succeeding. Injecting some isolation into the build would definitely help, but it can’t be relied on long-term on macOS. 2FA enforcement on all package repositories is long overdue and will stop a lot of the accidental malware, but not all of it. So we need more practical ideas and more layers of protection until we’re ready to burn it all down and (for example) use nix with a full sandbox for the build processes.