The murder mystery

Recently I ran into an issue with pulseeffects getting killed without a clear reason. While the bug itself doesn’t seem super interesting, the deep dive into debugging it is blog-worthy.

Pulseeffects is a good app for adding filters to your audio streams and fixing some noise problems, especially when recording with sensitive mics.

Normally, when a process is killed, you’ll find some log entries, or some audit entries from your security module, or a coredump. But in this case, when the audio stream stopped, the process just got killed by… something?

Finding the killer

Normally to figure out why a process dies, I’d look at a coredump if one is produced. In this case it wasn’t, so it looks like the process was killed with a signal different than SIGSEGV - i.e. it wasn’t a crash. If it was, the crash would be mentioned in the journal and coredumpctl would know about it.

That means, the process was killed with a signal that’s either not handled, or one which cannot be caught. Running the program under a debugger (gdb /usr/bin/pulseeffects) confirms that the signal could not be caught and that I was looking at something sending SIGKILL.

Unfortunately I didn’t know where to look specifically…

Tracing the whole system

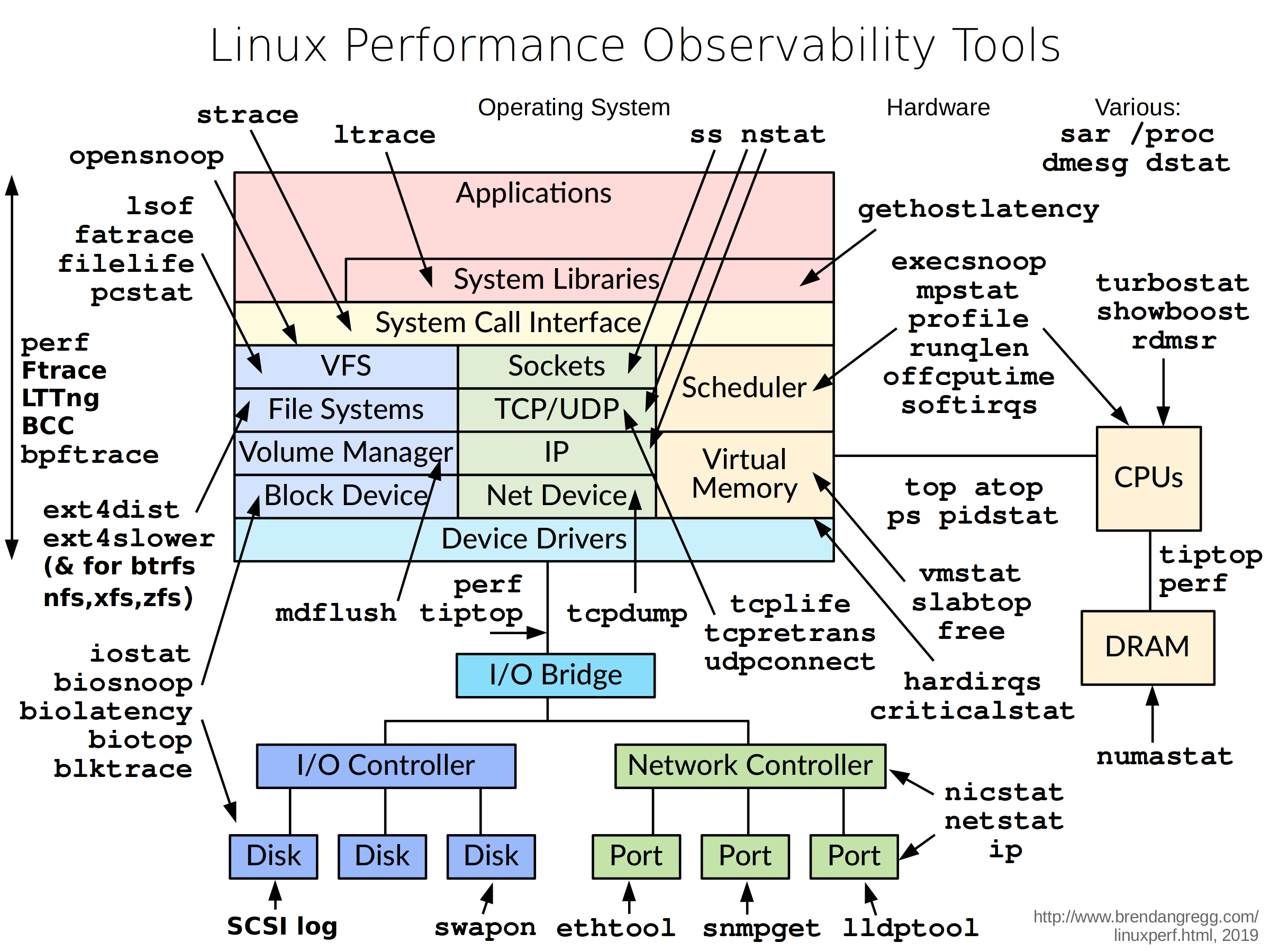

Recent Linux kernel (>= 4.x) allows using eBPF to record system-wide events and bpftrace is a nice Dtrace-like interface to it.. This means I could get more information about specific events without targetting a specific application. This came useful because I could look at where the signals come from. I’m not dealing with this layer too often, so I had to look through the list of all events to find something interesting:

bpftrace -l

...

tracepoint:signal:signal_generate

tracepoint:signal:signal_deliver

...

Turns out there are two events - one for the generating side and the other for delivery. I looked at both out of curiousity, but the generating side was the interesting one in this case. To find out who sent the signal, I ran:

bpftrace -e 'tracepoint:signal:signal_generate /args->sig == 9/

{ printf("%d %d %d %d\n%s\n%s\n", pid, tid, args->sig, args->errno, kstack, ustack); }'

This outputs some information about the source of the kill signal every time it happens anywhere in the system. That includes the kernel and user call stacks which will be useful for debugging later.

After reproducing the issue, it turns out that the signal originates from… pulseeffects itself!

(pulseeffects pid), (pulseeffects tid), 9, 0

(kstack here)

__send_signal+582

__send_signal+582

posix_cpu_timers_work+286

task_work_run+101

exit_to_user_mode_prepare+385

irqentry_exit_to_user_mode+5

asm_sysvec_apic_timer_interrupt+18

(ustack here)

00007fa23049447c

Reading the user stack

The userspace stack printed out by bpftrace is useful, but not immediately. On most systems in this decade the software will be compiled with as position independent (PIE) and will run on a system with address randomisation (ASLR) to prevent easy address guessing in exploits. This means each time the application is started, the addresses will be different and I couldn’t immediately map them to the right library / function.

There’s an easy way around this and a hard way. The hard way (possibly useful if you want to catch a very rare failure in production) is to extract the base address from the task itself, then save the process’s /proc/(pid)/map to find out which library it points to, then calculate the offset in the library and find the right function symbol. This is certainly doable and not super hard.

The easy way is to disable ASLR temporarily using sysctl (kernel.randomize_va_space=0), so I went with that instead. Now each time the address is consistent, so after getting it from bpftrace, I could start gdb /usr/bin/pulseeffects and check the address using info symbol 0x....:

(gdb) info symbol 0x00007fa23049447c

gtk_css_animated_style_create_css_transitions.constprop + 770 in section .text of /lib64/libgtk-3.so.0

Unfortunately each time the user address was different and most of the time pointed at things like gtk animation, which would never explicitly kill the application. That means the userspace code fault is not responsible for the issue.

Reading the kernel stack

Next to check is the kernel call stack. This one is automatically resolved to functions and offsets fortunately. It’s pretty clear that this code does end up with sending the signal and since that call doesn’t originate from userspace, it must be some condition in the kernel.

The first function below __send_signal is posix_cpu_timers_work and it’s part of the timer scheduling code. The source can be found at posix_cpu_timers_work and it seems to be a single call to another function. But that would normally take just a few bytes - how can we be over 200 bytes into that function and why doesn’t it call __send_signal? Turns out, a lot of the code in kernel is heavily inlined, which messes up the offsets really bad.

There are two paths from here and neither one is trivial. One is to disassemble the code of the interesting function and follow it, looking at the source code in parallel to note the interesting conditions and function calls to figure out what’s what. The other one is to install and use the debugging symbols, then use the source-annotated disassembly (gdb’s disassemble/m posix_cpu_timers_work). With multiple levels of inlining, this can get very confusing.

Choosing the second option (and scrolling past a few screens of complete garbage), I finally arrived at the matching offsets at:

bool check_rlimit(...) {

...

__group_send_sig_info(...)

}

The inlined stack was posix_cpu_timers_work -> handle_posix_cpu_timers -> check_thread_timers -> check_rlimit -> __group_send_sig_info (5 levels!).

So it looks like the process is killed by hitting some limits.

What actually happened

There’s a message the function can output about a “Watchdog Timeout”:

if (print_fatal_signals) {

pr_info("%s Watchdog Timeout (%s): %s[%d]\n",

rt ? "RT" : "CPU", hard ? "hard" : "soft",

current->comm, task_pid_nr(current));

}

So if the app is hitting that check, why didn’t I see the message?

A quick search shows that the print_fatal_signals flag is set via sysctl using:

sysctl kernel.print-fatal-signals=1

By default though, this flag is disabled, so we get a silent kill. Once it’s turned on, kernel log makes things much more clear:

kernel: RT Watchdog Timeout (hard): pulseeffects[22919]

Turns out that pulseeffects enabled both the soft and hard real-time rlimit settings. This unfortunately mean that if any action took more than 200ms without refreshing the watchdog, the app was killed immediately. And that seemed to happen in some cases when the audio stream ended.

Easier ways

Was there an easier way to find the answer? Sure, after looking at the bug I could search for “real-time SIGKILL” and find the answer. But that’s not as fun as debugging from “first principles” to learn some new things.